A/B Testing Strategy & Implementation Framework

Having led optimisation efforts across multiple e-commerce platforms, I've learned that successful A/B testing isn't just about running experiments; it's about driving measurable business growth through systematic user experience improvements.

Let me share our proven framework, which has typically delivered conversion rate improvements of 10-15%, although results vary with the specific circumstances.

Strategic Testing Framework Development

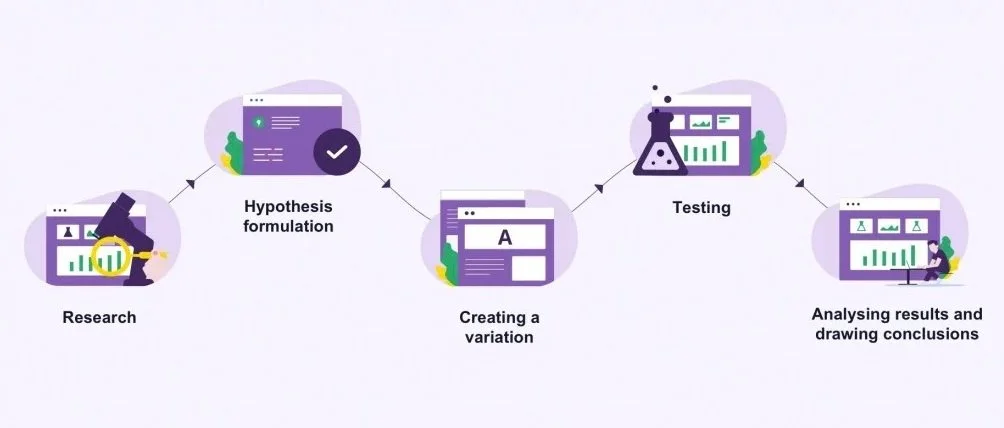

The foundation of our testing program rests on what I call the CRO (Comprehension, Response, Outcome) hypothesis framework. The acronym echoes Conversion Rate Optimisation, but the framework also emphasises understanding user behaviour and improving overall results. For instance, when we noticed a decline in multi-item purchases over three months, we didn't jump straight to solutions. Instead, we developed a structured hypothesis:

Comprehension: Data showed single-item purchasers weren't discovering complementary products effectively, particularly on mobile.

Response: We implemented inline product recommendations on the cart page, specifically targeting returning mobile users with a single item in their cart.

Outcome: This intervention increased our average order value significantly and boosted the multi-item purchase rate substantially within three months.
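To make the three steps concrete, the hypothesis can be written down as a small record, and the Response step's audience ("returning mobile users with single items") as an explicit eligibility rule. This is a minimal sketch under assumptions: `CROHypothesis`, `Visitor`, and `in_cross_sell_audience` are hypothetical names, and in practice the audience would be configured in your testing tool rather than in application code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CROHypothesis:
    """Hypothetical record mirroring the Comprehension/Response/Outcome steps."""
    comprehension: str                # what the data shows, and for whom
    response: str                     # the change we will test
    outcome_metrics: tuple[str, ...]  # metrics that decide whether it worked


@dataclass(frozen=True)
class Visitor:
    """Hypothetical visitor attributes; real field names depend on your tool."""
    is_returning: bool
    device_type: str      # e.g. "mobile", "desktop", "tablet"
    cart_item_count: int


def in_cross_sell_audience(visitor: Visitor) -> bool:
    """True only for returning mobile users with exactly one item in the cart."""
    return (
        visitor.is_returning
        and visitor.device_type == "mobile"
        and visitor.cart_item_count == 1
    )


cart_cross_sell = CROHypothesis(
    comprehension="Single-item mobile purchasers are not discovering complementary products.",
    response="Show inline product recommendations on the cart page to the target audience.",
    outcome_metrics=("average_order_value", "multi_item_purchase_rate"),
)
```

Writing the audience as a single predicate keeps the targeting rule reviewable in one place, so the debrief can check exactly who was exposed to the change.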

Test Prioritisation

We use a modified PIE framework (Potential, Importance, Ease) to evaluate testing opportunities. In practice, each test idea is scored from 1 to 10 on each criterion, and the ideas with the highest combined scores are prioritised. This approach helped us focus on high-impact tests that significantly increased revenue while keeping resource allocation efficient.
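The scoring step can be sketched in a few lines. This assumes the "combined score" is a plain sum of the three 1-10 ratings; the classic PIE approach averages them instead, which produces the same ranking. The specific modifications in our version are not captured here, and `ExperimentIdea` and `prioritise` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExperimentIdea:
    name: str
    potential: int   # 1-10: how much room for improvement the page has
    importance: int  # 1-10: how valuable the affected traffic is
    ease: int        # 1-10: how cheap the test is to build and run

    def __post_init__(self) -> None:
        # Reject scores outside the 1-10 scale so rankings stay comparable.
        for field_name in ("potential", "importance", "ease"):
            value = getattr(self, field_name)
            if not 1 <= value <= 10:
                raise ValueError(f"{field_name} must be between 1 and 10, got {value}")

    @property
    def pie_score(self) -> int:
        # Assumed combination rule: the simple sum of the three criteria.
        return self.potential + self.importance + self.ease


def prioritise(ideas: list[ExperimentIdea]) -> list[ExperimentIdea]:
    """Order ideas from highest to lowest combined PIE score (ties keep input order)."""
    return sorted(ideas, key=lambda idea: idea.pie_score, reverse=True)
```

For example, `prioritise([ExperimentIdea("Cart cross-sell widget", 8, 9, 6), ExperimentIdea("Checkout copy tweak", 4, 7, 9)])` puts the cart idea first, because its combined score is 23 against 20. The scores in this example are invented.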

Implementation Best Practices

Tool selection is crucial. We started with Adobe Target as our primary tool because it offers better audience targeting than Google Optimize (which Google discontinued in September 2023). Adobe Target provides advanced segmentation and personalised experiences, using AI for real-time optimisation, and its integration with Adobe Experience Cloud strengthens data analysis and marketing automation. The key is matching your tool to your team's capabilities and business needs.

On the statistical side, we aim for a confidence level of at least 80%, adjusting the threshold to the specific case and sample size. Test duration depends on traffic, but tests typically run for at least one to two weeks so that results are reliable and cover both weekday and weekend behaviour.

Results Analysis and Implementation

Beyond tracking primary metrics, we analyse secondary indicators such as Customer Lifetime Value and return rates. One of our most successful tests, a redesigned product recommendation algorithm, initially showed only a small conversion rate improvement but led to an increase of more than 20% in Customer Lifetime Value over six months.
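Comparing primary and secondary metrics side by side comes down to computing each metric's relative lift. The sketch below assumes every metric is already aggregated per variant. The metric names and figures are invented for illustration and are not the results of the test described above.

```python
def relative_lift(control: float, treatment: float) -> float:
    """Relative change of treatment over control: 0.25 means +25%."""
    if control == 0:
        raise ValueError("control value must be non-zero to compute a relative lift")
    return (treatment - control) / control


def lift_report(control: dict[str, float], treatment: dict[str, float]) -> dict[str, float]:
    """Relative lift for every metric measured in both variants.

    For metrics where lower is better (e.g. return rate), a negative lift
    is the desirable direction.
    """
    return {
        metric: relative_lift(control[metric], treatment[metric])
        for metric in control
        if metric in treatment
    }


# Invented illustrative figures, not results from any real test.
control = {"conversion_rate": 0.040, "clv_6_months": 200.0, "return_rate": 0.10}
treatment = {"conversion_rate": 0.041, "clv_6_months": 230.0, "return_rate": 0.09}

for metric, lift in lift_report(control, treatment).items():
    print(f"{metric}: {lift:+.1%}")
```

Reporting every metric on the same relative scale makes it easier to spot a small primary-metric gain that comes with a much larger secondary-metric effect.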

The Post-Test Debrief Process

Following each A/B test, we hold a structured 30-40 minute "Post-test Debrief" session aimed at turning raw data into actionable insights about customer behaviour. It brings together our analyst, product manager, UX designers, and other relevant teams, so that the discussion goes beyond headline performance metrics.

Our debrief sessions concentrate on three main areas:

What We Know and Learned: This includes facts, surprises, and key data takeaways.

General Observations: We identify broader trends and unexpected patterns.

Emerging Themes and Hypotheses: Here, we explore what the test suggests for future investigations.

We document everything on a shared online whiteboard that serves as our living repository of experimentation insights. This systematic approach has transformed our quarterly planning: instead of sifting through old documents and Slack messages to recall past tests, we now draw on an organised knowledge base that supports brainstorming and ensures new experiments build on proven learnings rather than repeating earlier work (Adobe Design, 2025).
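One way to keep debrief notes searchable is to store each session as a record organised by the three discussion areas, tagged with the page it concerned. This is a hypothetical sketch: our repository is a whiteboard, not code, and `DebriefEntry`, the `page` tag, and the lookup helpers are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class DebriefEntry:
    """One post-test debrief, organised by the three discussion areas."""
    test_name: str
    page: str  # hypothetical tag used to find related tests, e.g. "cart"
    known_and_learned: list[str] = field(default_factory=list)
    observations: list[str] = field(default_factory=list)
    hypotheses: list[str] = field(default_factory=list)


def prior_learnings(repository: list[DebriefEntry], page: str) -> list[DebriefEntry]:
    """Return earlier debriefs for the same page, in repository order."""
    return [entry for entry in repository if entry.page == page]


def open_hypotheses(repository: list[DebriefEntry], page: str) -> list[str]:
    """Collect the hypotheses raised in earlier debriefs for this page."""
    return [h for entry in prior_learnings(repository, page) for h in entry.hypotheses]
```

Before planning a new cart-page test, for example, `open_hypotheses(repository, "cart")` would surface the ideas that earlier debriefs flagged for follow-up.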

Remember: successful A/B testing isn't just about winning tests; it's about building a culture of continuous optimisation. In our experience, even "failed" tests provide valuable insights that inform future improvements, which is why documentation matters so much. We maintain a testing wiki (e.g., Atlassian Confluence) that has become an invaluable resource for onboarding new team members and planning future experiments. Through this framework, we've achieved a high test success rate, but the real value lies less in individual wins than in the cumulative impact of systematic, data-driven optimisation.